Technology Gone Wild: Rise of the Machines

- Thread starter 5 STAR U-G-A

This was almost a good video... 'cept we could use a little more chick running the saw.

How about chick on chainsaw powered super bike?

5 STAR U-G-A

Legend

This was almost a good video... 'cept we could use a little more chick running the saw.

How about chick on chainsaw powered super bike?

Agree completely. The thumbnail suggested that the chick would be using it more, or primarily using it. It was very disappointing in that regard.

Regarding the photo, now that's a piece of equipment I'd certainly rise for. The chainsaw powered bike is pretty cool too.

5 STAR U-G-A

Legend

Looking at the date this tweet was published, I'm really hoping this is an April Fools Joke.

EDIT: Nope, it's real.

‘Magnetic turd’: scientists invent moving slime that could be used in human digestive systems

Researcher who co-created substance says it is not an April fool’s joke and they hope to deploy it like a robot

https://www.theguardian.com/science...that-could-be-used-in-human-digestive-systems

Scientists have created a moving magnetic slime capable of encircling smaller objects, self-healing and “very large deformation” to squeeze and travel through narrow spaces.

The slime, which is controlled by magnets, is also a good electrical conductor and can be used to interconnect electrodes, its creators say.

The dark-coloured magnetic blob has been compared on social media to Flubber, the eponymous substance in the 1997 sci-fi film, and described as a “magnetic turd” and “amazing and a tiny bit terrifying”.

Prof Li Zhang, of the Chinese University of Hong Kong, who co-created the slime, emphasised that the substance was real scientific research and not an April fool’s joke, despite the timing of its release.

The slime contains magnetic particles so that it can be manipulated to travel, rotate, or form O and C shapes when external magnets are applied to it.

The blob was described in a study published in the peer-reviewed journal Advanced Functional Materials as a “magnetic slime robot”.

“The ultimate goal is to deploy it like a robot,” Zhang said, adding that for the time being the slime lacked autonomy. “We still consider it as fundamental research – trying to understand its material properties.”

The slime has “visco-elastic properties”, Zhang said, meaning that “sometimes it behaves like a solid, sometimes it behaves like a liquid”.

It is made of a mixture of a polymer called polyvinyl alcohol, borax – which is widely used in cleaning products – and particles of neodymium magnet.

“It’s very much like mixing water with [corn] starch at home,” Zhang said. Mixing the two produces oobleck, a non-Newtonian fluid whose viscosity changes under force. “When you touch it very quickly it behaves like a solid. When you touch it gently and slowly it behaves like a liquid,” Zhang said.

While the team have no immediate plans to test it in a medical setting, the scientists envisage the slime could be useful in the digestive system, for example in reducing the harm from a small swallowed battery.

“To avoid toxic electrolytes leak[ing] out, we can maybe use this kind of slime robot to do an encapsulation, to form some kind of inert coating,” he said.

The magnetic particles in the slime, however, are toxic themselves. The researchers coated the slime in a layer of silica – the main component in sand – to form a hypothetically protective layer.

“The safety [would] also strongly depend on how long you would keep them inside of your body,” Zhang said.

Zhang added that pigments or dye could be used to make the slime – which is currently an opaque brown-black hue – more colourful.

5 STAR U-G-A

Legend

I know, I know. Buzzfeed. But it's actually pretty interesting and informative.

This Texas Town Was Deep In Debt From A Devastating Winter Storm. Then A Crypto Miner Came Knocking.

A 2021 winter storm overwhelmed Denton's power grid, pushing the city into crushing debt. Then a faceless company arrived with a promise to refill its coffers — and double its energy use.

Sarah Emerson

https://www.buzzfeednews.com/article/sarahemerson/denton-texas-crypto-miner-core-scientific

Service trucks line up after a snowstorm on Feb. 16, 2021, in Fort Worth, Texas. Winter storm Uri brought historic cold weather and power outages to Texas as storms swept across 26 states with a mix of freezing temperatures and precipitation.

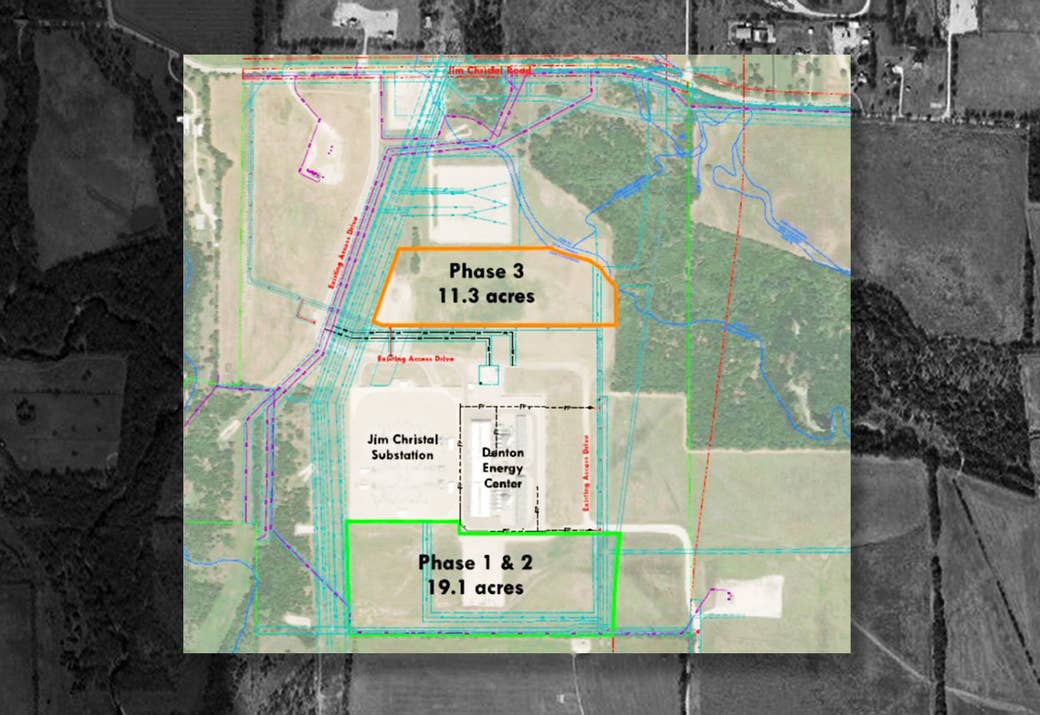

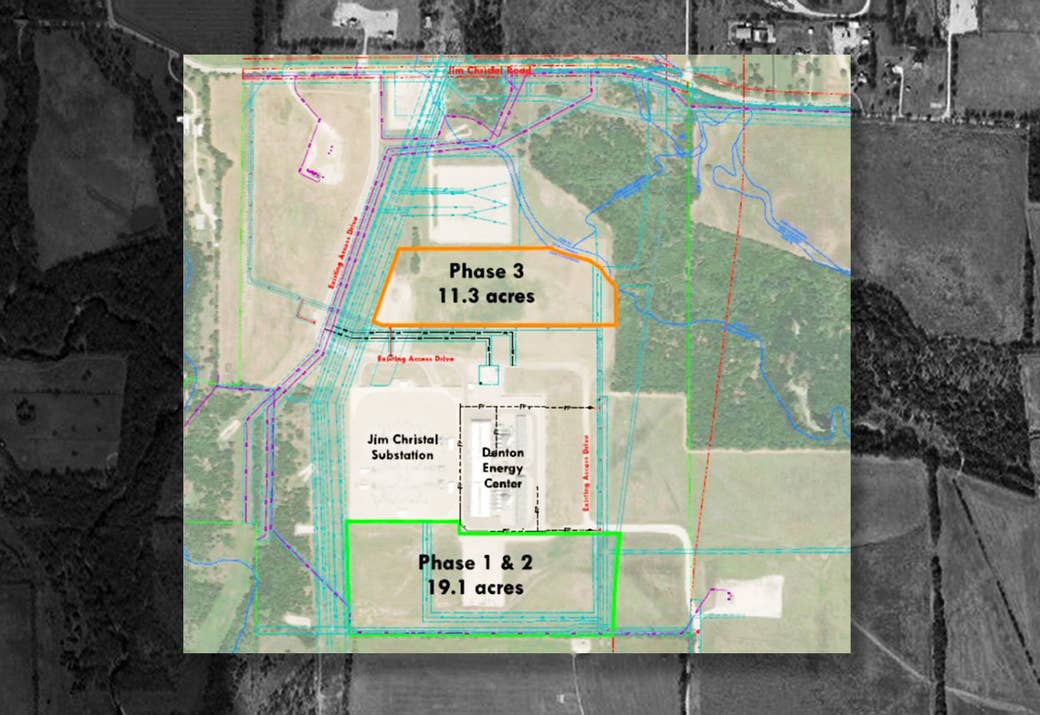

A visualization of the Core Scientific cryptocurrency mine at Denton’s natural gas power plant.

Core Scientific has leased roughly 30 acres of land at the Denton Energy Center.

Last February, a disastrous winter storm pummeled Texas with ice and snow, threatening to topple the Texan energy grid. In the city of Denton, neighborhoods blinked off and on as the local power provider tried to conserve electricity. Places like assisted living facilities were momentarily excluded from the blackouts, but those eventually went dark too. Then the gas pipelines froze, and the power plant stopped working. Over the next four days, the city bled more than $200 million purchasing energy on the open market. It would later sue the Electric Reliability Council of Texas, the grid operator also known as ERCOT, for saddling Denton with inflated energy prices that caused it to accrue $140 million in debt.

“While we were in an emergency, ERCOT allowed prices to go off the scale,” Denton City Council Member Paul Meltzer told BuzzFeed News. “We were forced to pay. We were approached Tony Soprano–like to empty our reserves.”

So when a faceless company appeared three months later, auspiciously proposing to solve Denton’s money problems, the city listened. A deal was offered. In exchange for millions of dollars in annual city revenue, enough to balance its ledgers and then some, Denton would host a cryptocurrency mine at its natural gas power plant. And not just any mine — a massive data center that would double Denton’s energy footprint so that rows upon rows of sophisticated computers could mint stockpiles of bitcoin, ether, and other virtual currencies. To officials who had simply tried to keep the lights on when conditions became deadly, the unexpected infusion seemed a relief.

But the thought of becoming a crypto town was a bitter pill for some to swallow.

“It was super shady just the way it all came about because none of the elected officials around here have ever talked about crypto, and suddenly we’re renting out space near the power plant that’s gonna use as much energy as the whole city consumes,” said Denton resident Kendall Tschacher, who told BuzzFeed News that by the time he learned about the crypto project, it was all but a done deal.

During preliminary discussions with Denton, the crypto miner was simply referred to as the “client,” its name conditionally withheld from the public on the order of Tenaska Energy, a natural gas marketer that negotiated the multimillion-dollar deal. Its name was finally disclosed in local government meetings in August: The miner moving into Denton was Core Scientific, a company cofounded in 2017 by one of the lesser known creators of Myspace, Aber Whitcomb. Headquartered in Austin, the company went public in January through an estimated $4.3 billion SPAC merger.

A bitcoin farm on a natural gas plant is a fitting metaphor for Texas. From Austin to Abilene, crypto prospectors are staking claim to the electrical grid, hungry for cheap power and unique incentives that can effectively pay them to be there. Republican lawmakers have declared the state “open for crypto business,” gambling the industry will in turn buttress a failing fossil fuel economy and meet demands for more clean energy production. Crypto enterprises are now amassing vast amounts of energy that, they claim, can be redistributed to the grid when power reserves are needed most. Companies with valuations 100 times greater than the revenue of local cities are funding schools, sustainability projects, and discretionary spending coffers. And at the smallest levels of government, crypto operations are somehow charming communities who don’t really seem to want them there.

In Denton, the deal’s secretive nature and concerns about the mine’s colossal energy requirements inspired some council members to openly oppose the facility — at least at first.

“The very existence of crypto seems totally stupid to me,” Meltzer said.

Yet after months of deliberation in open and closed-door meetings, the deal was near-unanimously approved. Officials like Meltzer, who’d previously challenged the project’s environmental commitments, began assuring residents that it was the right choice for Denton.

Denton’s story is a tale of how a small town government embraced something to which it first seemed ideologically opposed. It’s a schematic for how the burgeoning crypto industry — which promises to solve climate change, repair crumbling infrastructure, and fix all manner of societal issues by putting them on the blockchain — is literally remaking communities, hedging their futures on its own unforeseeable success.

When put to a vote last August, Denton’s city council approved the mine, which quietly came online last month. Only one council member, Deb Armintor, voted against it. Denton Mayor Gerard Hudspeth said in an October press release that the city is “honored to have been selected as the site of Core Scientific’s first blockchain data center in Texas.” He did not respond to a request for comment; Core Scientific declined to answer a detailed list of questions.

Crypto is a polarizing subject, either the genesis of “world peace” or the thing that’s going to ruin the world, depending on who’s talking. But those prevailing narratives ultimately fell flat in Denton, where crypto is a means to various ends. Like the industries of yore that shaped countless small towns across the nation, crypto companies are hoping to secure their futures by fulfilling the needs of the communities they invade. What happened in Denton, a smallish city of nearly 140,000 people, remains one of the clearest examples of how those communities are sometimes compelled to answer when crypto comes knocking at their door.

Terry Naulty stood at a dais in Denton’s city hall during an August city council meeting. “Without approval, these funds will not be available,” he told Denton’s city council, public utilities board, and planning and zoning commission in an attempt to convince them that Denton would be better off taking the money the Core Scientific deal offered.

As the assistant general manager of Denton Municipal Electric (DME), the city’s owned and operated power utility, Naulty was part of an ad hoc project team advocating for the Core Scientific deal. By the first of these hearings in June, the team had already been in talks with Tenaska for a month about selling the mine to city officials.

Core Scientific pledged a capital investment of $200 million to the project, according to recordings of city council meetings. Dozens of modular mining containers, roughly the shape and size of a semitrailer truck, would connect to the gas plant’s existing power infrastructure, a driving factor in why the company chose Denton.

On a presentation slide, rows of six-figure numbers listed the revenue that Denton could reap should council members greenlight the project. At full buildout in 2023, DME stands to earn between $9 million and $11 million annually from taxes and fees. A portion of that revenue would be transferred to the city’s general fund to be used at council members’ discretion, allowing them to pursue sustainability initiatives currently out of reach.

For DME, said Naulty, this net income “would offset the cost of Winter Storm Uri,” offering Denton the opportunity to get back in the black after accumulating $140 million in debt.

Last February, millions of Texans lost power for days after freezing temperatures brought on by the winter storm pushed the ERCOT grid within minutes of total collapse. At least 750 people died as a result, according to a BuzzFeed News analysis. Neglected energy infrastructure, a lack of power reserves, and the repercussions of a deregulated market were blamed in the aftermath. Republicans like Gov. Greg Abbott cried “green energy failure,” though in truth all production was curtailed, and natural gas suffered catastrophically. Since Texas’s grid is an island, unable to draw power from neighboring states, both customers and utilities were literally left in the dark.

That week, DME spent approximately $210 million purchasing electricity from ERCOT, almost as much as it had paid in the previous three years combined. Whereas energy rates in February 2020 were $23.73 per megawatt hour, they spiked as high as $9,000 during the storm. The city had no choice but to buy power at jaw-dropping wholesale rates.

The alternative to approving the Core Scientific deal, Naulty said, would be to raise rates for DME customers by 3%. Without the crypto mine to cover the blackout’s costs, the city’s residents would have to pay for ERCOT’s failures, amounting to an additional $3.75 per month on the average customer’s bill.
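As a rough check on the figures quoted above, here is a minimal Python sketch. It assumes the 3% increase applies uniformly to the average monthly bill and that $9,000 was the peak price per megawatt hour; the function names are illustrative and do not come from the article.

```python
# Figures quoted in the article; anything derived from them is back-of-envelope.
RATE_INCREASE = 0.03        # proposed 3% rate increase
EXTRA_PER_MONTH = 3.75      # dollars added to the average monthly bill
NORMAL_PRICE = 23.73        # dollars per MWh, February 2020
STORM_PEAK_PRICE = 9000.00  # dollars per MWh, peak during the storm


def implied_average_bill(extra: float, increase: float) -> float:
    """Monthly bill that a given fractional increase would raise by `extra` dollars."""
    return extra / increase


def price_multiple(peak: float, normal: float) -> float:
    """How many times higher the peak price is than the normal price."""
    return peak / normal


avg_bill = implied_average_bill(EXTRA_PER_MONTH, RATE_INCREASE)
spike = price_multiple(STORM_PEAK_PRICE, NORMAL_PRICE)

print(f"Implied average monthly bill: ${avg_bill:.2f}")
print(f"Storm peak vs. February 2020 price: about {spike:.0f}x")
```

Under those assumptions, the quoted numbers imply an average bill of roughly $125 a month and a peak wholesale price several hundred times the February 2020 rate.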

Armintor remarked that even a small rate increase can be a real burden for struggling locals. “People have their power shut off just for being too poor to pay their bills,” she said.

Denton is a green blip in a sea of red. Eight years ago, local activists mustered enough community support to ban fracking in the city, the first ordinance of its kind in a state that’s the country’s number one oil and gas producer. (Months later, fossil fuel lobbyists succeeded in overturning the rule.) In 2020, Denton began offsetting 100% of its electrical footprint through renewable energy contracts; it is the only city in Texas that currently does so.

In public meetings, Naulty assured Dentonites that for the Core Scientific crypto mine, the energy used would be “100% green.” One project document claimed that putting the mine in Denton “supports carbon emissions reduction on a global basis.” It approximated the mine’s carbon footprint in China would equal 2 million tons, and on the ERCOT grid, it would total 1.1 million tons. But because the project is in Denton, the document claims, the mine’s footprint factors to zero, owing to renewable energy offsets.

But how could such a large mine, one that will consume the same amount of electricity as the city itself, ever consider itself green? That question led some council members, such as Alison Maguire, to initially oppose the deal.

“I do want us to be cautious about the idea that we can use as much energy as we want as long as it’s renewable,” Maguire said during a June hearing.

Core Scientific’s claims are based on its plan to offset its emissions using renewable energy credits, or RECs. These are credits produced by clean energy projects such as wind farms and solar arrays. One REC equals one megawatt hour of renewable energy added to the ERCOT grid. Customers purchasing RECs aren’t acquiring that electricity directly, but rather the proof that the same amount of electricity had, somewhere and at some point, been created sustainably.

Think of it like this: Blake in Oakland dumps 10 gallons of trash into the ocean. He feels bad, and pays Sam in San Francisco because she removed 10 gallons of trash from her side of the bay last month. The net result is that zero gallons of garbage were added to the ocean, yet Blake’s old takeout is still floating somewhere across the Pacific, and Sam would have removed that trash from the ocean even without Blake’s compensation.

In a city council meeting, Tenaska said it would be supplying the RECs. It did not respond to repeated requests for comment.

In the June hearing, Maguire noted that purchasing RECs was “certainly a lot better than powering through fossil fuels,” but she appeared to be unconvinced by the deal’s merits. In a July Facebook post, Maguire urged her constituents to speak out against the mine. “We don’t need to allow an unidentified company to use our city’s infrastructure to increase greenhouse gas emissions for the sole purpose of turning a profit,” she wrote. (Ultimately, like most other council people who expressed fears about the project’s sustainability promises, Maguire voted in favor of the mine. She did not respond to repeated requests for comment.)

Maguire’s caution was not unfounded. RECs are a controversial accounting strategy used by large companies like Amazon and Google to “decarbonize” their facilities. While proponents say that greater demand for these credits will incentivize more clean energy production, climate policy regulators warn that offsets aren’t a replacement for directly contracting with renewable power projects or reducing energy use entirely.

Yet crypto companies, facing pressure externally and internally to address their environmental harms, are nevertheless turning to credits as a stopgap measure. In a January update, Core Scientific announced that 100% of its carbon emissions were offset through RECs.

In addition, DME general manager Tony Puente said that Core Scientific will be purchasing RECs already in existence for the Denton mine, which means the project will not be directly contributing to the creation of new renewable energy. Core Scientific declined to answer questions about its use of RECs.

“Crypto is increasing demand and we need to reduce demand,” said Luke Metzger, who leads the advocacy group Environment Texas. “If [Core Scientific] is just purchasing RECs, then no, they’re not in fact supporting the development of new renewables.”

But the calculus was enough to convince some council members that the project would in fact be green. “If you’re at all serious about climate impact and if you accept the fact that [the mine] is going to be built, you are doing massively better for the environment by having it in Denton,” Meltzer told BuzzFeed News.

Core Scientific’s use of RECs is different from how Denton supports renewables: The city has long-term agreements with solar and wind projects to bring new clean energy onto the grid.

“It would be dirty 10 miles down the road, and you can argue it’s still dirty here, but all the electrons we buy are clean electrons,” Council Member Brian Beck told BuzzFeed News.

Even though he supported the deal, Beck echoed the concerns of environmental critics, saying that RECs are “not quite the market push as buying direct power agreements.” But regardless, Beck insisted the project will correct ERCOT’s “pee to pool water” ratio of renewables to fossil fuels. Meltzer and Beck confirmed that in the future, council members can choose to pursue a direct power agreement over RECs for the mine’s energy.

But RECs have their benefits too, because the crypto project’s unknown lifespan carries certain risks. Puente said he didn’t want Denton to enter a long-term contract to supply the mine with renewable energy “and then this company leaves tomorrow.” While Core Scientific has the option to extend its agreement with Denton for up to 21 years, even pitch documents asked, “What happens when it’s over?”

Meltzer was also swayed to support the deal because he says up to $1.2 million of annual revenue could finance a sustainability fund, something Denton had never been able to do in previous fiscal years. During one city council meeting, a slide proposed that this money could be used for initiatives like creating a Denton climate action plan, installing air quality monitoring stations throughout the city, and weatherizing the homes of low-income residents.

Council members told BuzzFeed News that they were able to push for some changes to the 138-page power purchase agreement that governs the relationship between the city and Core Scientific. After reading a draft of the agreement, council members said they included a mandate that the mine be cooled by air chillers as opposed to water cooling systems, preventing the mine from accessing Denton’s water reserves (a resource that traditional data centers have guzzled in other towns).

Armintor, the only council member who voted against the deal, was dissatisfied with its terms and rebuked Core Scientific’s offer in a September Facebook post. “I would’ve thought we’d all have been totally averse to crypto in Denton, and I think that was people’s first response,” Armintor told BuzzFeed News. But she believes her fellow council members were persuaded to approve the project because of the “promise of money it might generate.”

Armintor suggested that funds for the sustainability initiatives could have been reappropriated from Denton’s police department or Chamber of Commerce. “My argument is that we’re legislators, we can do this by reallocating money we already have so that we don’t have to make a deal with the devil,” she said.

Denton is a microcosm of global changes that are transforming the US into Eden for crypto miners. Miners infamously crept into states like Washington and New York as early as 2017, but it wasn’t until China declared a sweeping ban on bitcoin mining last year that America became a crypto superpower. The US now hosts 35% of bitcoin’s hashrate, more than any other country in the world, and half of the largest crypto companies.

As a result of “the Great China Unplugging, lots of big companies are redomiciling their operations here and are looking at Texas as a base,” John Belizaire, founder and CEO of crypto mining company Soluna Computing, told BuzzFeed News. Soluna has invested in a 50-megawatt mine currently under development in Silverton.

This momentum propelled Tenaska, like many other oil and gas companies, into the crypto landscape. The company revealed in negotiations with Denton that it is managing the energy needs of miners in other regions in Texas and Montana. In Texas, Tenaska has aligned itself with the Texas Blockchain Council, a powerful lobbying group that helped to pass two state blockchain bills last year. Both Tenaska and Core Scientific are listed as Texas Blockchain Council partners.

By the end of 2022, ERCOT is expected to provide power for 20% of the bitcoin network thanks in part to political support. Miners in Texas will require twice the power of Austin, a city of 1 million people. To a cluster of conservative lawmakers including Sen. Ted Cruz, crypto has become a convenient vector for US nationalism.

“Inheriting the hashrate from China is seen as a good thing because not only is America bringing a profitable industry over from China, but China is now also ‘anti-freedom’ for outlawing cryptocurrency,” said Zane Griffin Talley Cooper, a doctoral candidate at the University of Pennsylvania who studies crypto’s interaction with energy infrastructure.

These dynamics weren’t wasted on Denton’s officials. “What we have essentially is a runaway teen from Asia that we are fostering,” Beck said during an August hearing. “We have the opportunity here not just to foster that child, but to be an adult in the room.”

However, council members had to make a leap of faith when it came to crypto’s most ambitious claim. Across the nation, companies like Core Scientific are promising to strengthen local grids by devouring huge amounts of energy. It sounds contradictory, but to bitcoin evangelists like Belizaire, crypto’s energy demands are a “feature, not a bug.”

It’s a sales pitch that relies on two largely untested assumptions: One, that crypto’s energy requirements will stimulate new clean energy production. And two, that miners will respond to the grid’s larger needs, powering down when energy demands are at their highest.

“There are grains of truth in the things I’m hearing, but it sometimes gets overtaken by hyperbole and rhetoric,” energy analyst Doug Lewin told BuzzFeed News. Though Lewin doesn’t believe that bitcoin is a vehicle to a clean energy future, he theorized that mines could serve a beneficial purpose under a specific set of conditions.

For example, wind and solar farms in West Texas can be forced to “curtail” their production due to a lack of adequate transmission infrastructure and battery storage capacity. Even on the windiest or sunniest of days, if power lines can’t carry those electrons across the ERCOT grid, operators have no choice but to reduce their energy output. A crypto mine built adjacent to these farms could conceivably power its operations on excess renewable energy alone. Such solutions have been endorsed by people like Square CEO Jack Dorsey. Last year, Square commissioned a whitepaper titled “Bitcoin Is Key to an Abundant, Clean Energy Future.” True believers in bitcoin’s utility have even adopted the phrase “bitcoin as a battery.”

“You’re not a battery, you don’t store energy,” Lewin said.

Core Scientific also pledged to modulate its power use, purchasing energy but also distributing it back onto the grid. For a city still recovering from last year’s outages, this was a crucial selling point. If the mine “is ready to produce two Dentons’ worth of power, and we start to have grid instability issues, you turn off the data center,” Beck explained.

“Interruptibility” isn’t new or unique to crypto. Austin semiconductor plants idled during the February 2021 winter storm, and ERCOT works with other large facilities during emergency events to reduce their demands. But miners believe they stand alone in being able to power down within seconds without harming their operations.

While ERCOT did not comment for this story, ERCOT chief Brad Jones has endorsed crypto as a grid partner and said he’s personally “pro bitcoin.”

Project documents claim that Core Scientific’s data center will shut down “during high price periods or scarcity of generation events or higher than forecasted load.” In practice, Core Scientific will base its daily operations — when to run, and at what capacity — on variable ERCOT market rates. If it feels that prices are too high to mine, it can choose to power down.

According to Puente, Core Scientific has also entered a confidential agreement with ERCOT to curtail its energy use during peak demand. For example, during another extreme weather event, ERCOT may require the mine to stop running and, in return, will pay Core Scientific for the energy it frees up on the grid at a profit to the crypto company. In other words, Core Scientific is either saving money or making money if it powers down. Should it refuse to do so, “we will automatically open up their breaker to shut off power to them, if needed,” Puente said.

Tenaska and ERCOT did not respond to numerous requests for comment about how this arrangement works. Core Scientific declined to comment on all aspects of its operations in Denton. So it’s unclear when, exactly, the mine can be mandated to power down, and whether ERCOT has legal recourse if Core Scientific does not comply. The city’s contract with Core Scientific states that DME “shall have the right, but not the obligation” to force compliance, and that DME is not liable for any penalties if Core Scientific fails to curtail its energy use. A portion of these terms is redacted.

Skeptics have also warned that the unpredictable price of cryptocurrency could be a more compelling factor than market force and community needs. In Chelan County, Washington, one of the first US communities to host a crypto diaspora, miners elected to run continuously and its local power utility “did not receive serious modulation offers.”

But things may have shifted now that crypto companies face increasing public accountability. When a cold front descended on Texas in February, large miners such as Riot Blockchain and Rhodium Enterprises curtailed their energy consumption by up to 99%, and a host of other miners also voluntarily powered down.

“We are proud to help stabilize the grid and help our fellow Texans stay warm,” Rhodium’s CEO tweeted.

Three hours off, one hour on. During the rolling blackouts of 2021’s deadly winter storm, people in Denton scrambled to heat their homes and charge their devices whenever power momentarily returned. Daniel Hogg, a Denton resident of 12 years, told BuzzFeed News that he was “piled up with my dogs and lots of blankets” before evacuating to a friend’s house in unaffected Lewisville.

On Facebook and Reddit, with the memory of that storm still fresh in their minds, some residents have accused the city of agreeing to a losing deal. Hogg is among the locals unconvinced by crypto’s claims, and believes the city council should have done more to involve residents in their decision-making process. Though citizens could attend public hearings, Hogg said they weren’t well publicized. He wasn’t aware the deal was even happening until after officials had already voted to approve it.

“It’s so weird the way everything was done so secretively,” Hogg said.

Beck and Meltzer disagreed with the assertion that council members didn’t solicit public input. “People are as involved as they want to be,” Meltzer said.

While the city council was made aware of Core Scientific’s identity in private, members were prohibited from saying its name out loud in early hearings. Last August, Armintor, who voted against the deal, told the project team that these nondisclosure terms, by which both council members and city employees were bound, “make me uncomfortable.”

Hogg first learned of the deal through a September Dallas Morning News Watchdog story by reporter Dave Lieber. The story was first to note that chunks of the city’s 138-page power purchase agreement were redacted to conceal ERCOT figures, construction deadlines, and infrastructure details. The contract also required that “neither Party shall issue any press or publicity release or otherwise release, distribute or disseminate any information,” though Core Scientific would eventually publish a joint statement on the deal last October. Core Scientific declined to provide BuzzFeed News with an unredacted agreement or comment on the deal’s secrecy.

Even now, after some project details have been released, the document remains redacted under Texas public information law. Puente and Beck told BuzzFeed News that the agreement was written by a team of city staffers, Denton lawyers, and Tenaska and Core Scientific representatives.

“I don’t think people understand enough to know what they would be protesting,” Denton resident Tschacher told BuzzFeed News. Despite being a crypto enthusiast himself, Tschacher also thinks Denton could have received more from the deal, such as a commitment to train and hire employees locally. The site is expected to support just 16 permanent jobs at completion.

“I guess I was mostly disappointed because we took the Texas approach and took the easy money,” he added.

Near the outskirts of town, machines have just begun mining at Denton’s power plant. Even though crypto projects are poised to pepper the Texas landscape, only a few mines the size of Core Scientific’s are currently operational. Denton has become a petri dish for this new era of cryptocurrency — not because its leaders believe in the transformational potential of the blockchain, but because it offered a service when the city needed it most.

This is just the beginning of Denton’s crypto experiment. Puente said the city is considering proposals from three other crypto miners, but because of nondisclosure agreements, wouldn’t reveal who they are. If these deals materialize, Denton’s energy needs would practically disappear beneath the hulking appetites of its new, power-hungry residents.

“Even though they’re our customer,” Meltzer said, “I do hope their industry goes under.” ●

March 16, 2022 at 3:28 PM

Correction: Soluna Computing's mine is under development in Silverton. The location was misstated in a previous version of this post.

Service trucks line up after a snowstorm on Feb. 16, 2021, in Fort Worth, Texas. Winter storm Uri brought historic cold weather and power outages to Texas as storms swept across 26 states with a mix of freezing temperatures and precipitation.

A visualization of the Core Scientific cryptocurrency mine at Denton’s natural gas power plant.

Core Scientific has leased roughly 30 acres of land at the Denton Energy Center.

5 STAR U-G-A

Legend

The Man in the Flying Lawn Chair

Why did Larry Walters decide to soar to the heavens in a piece of outdoor furniture?

By George Plimpton

https://www.newyorker.com/magazine/1998/06/01/the-man-in-the-flying-lawn-chair

At fifteen thousand feet, Larry dropped the air pistol he was using to pop his helium-filled balloons and control his descent.

The back yard was much smaller than I remembered—barely ten yards by thirty. The birdbath, stark white on its pedestal, was still there, under a pine tree, just as it had been during my last visit, more than ten years before. Beyond the roofs of the neighboring houses I could see the distant gaunt cranes of the Long Beach naval facility, now idle.

“Mrs. Van Deusen, wasn’t there a strawberry patch over here?” I called out.

I winced. Margaret Van Deusen has been blind since last August—first in one eye, then in the other. Her daughter, Carol, was leading her down the steps of the back porch, guiding her step by step. Mrs. Van Deusen was worried about her cat, Precious, who had fled into the innards of a standup organ upon my arrival: “Where’s Precious? She didn’t get out, did she?”

Carol calmed her fears, and my question about the strawberry patch hung in the air. Both women wore T-shirts with cat motifs on the front; Mrs. Van Deusen’s had a cat head on hers, with ruby eyes and a leather tongue.

We had lunch in a fast-food restaurant in San Pedro, a couple of miles down the hill. Mrs. Van Deusen ordered a grilled cheese sandwich and French fries. “I can’t believe Larry’s flight happened out of such a small space,” I said.

Mrs. Van Deusen stirred. “Two weeks before, Larry came to me and said he was going to take off from my back yard. I said no way. Illegal. I didn’t want to be stuck with a big fine. So the idea was he was going to take off from the desert. He couldn’t get all his equipment out there, so he pulls a sneaker on me. He turns up at the house and says, ‘Tomorrow I’m going to take off from your back yard.’ ”

“I was terrified, but I wanted to be with him,” said Carol, who was Larry’s girlfriend at the time.

“And sit on his lap?” I asked incredulously.

“Two chairs, side by side,” Carol said. “But it meant more equipment than we had. I know one thing—that if I’d gone up with him we would have come down sooner.”

“What happened to the chair?” I asked.

Carol talked in a rush of words. “He gave it away to some kid on the street where he landed, about ten miles from here. That chair should be in the Smithsonian. Larry always felt just terrible about that.”

“And the balloons?”

“You remember, Mom? The firemen tied some of the balloons to the end of their truck, and they went off with these things waving in the air as if they were coming from a birthday party.”

“Where are my fries?”

“They’re in front of you, Mom,” Carol said. She guided her mother’s hand to the sticks of French fries in a cardboard container.

Mrs. Van Deusen said, “Larry knocked some prominent person off the front page of the L.A. Times, didn’t he, Carol? Who was the prominent person he knocked off?”

Carol shook her head. “I don’t know. But that Times cartoonist Paul Conrad did one of Ronald Reagan in a lawn chair, with some sort of caption like ‘Another nut from California.’ Larry’s mother was upset by this and wrote a letter to the Times. You know how mothers are.”

I asked Mrs. Van Deusen, “What do you remember best about the flight?”

She paused, and then said she remembered hearing afterward about her five-year-old granddaughter, Julie Pine, standing in her front yard in Long Beach and waving gaily as Larry took off. “Yes. She kept waving until Larry and his chair were barely a dot in the sky.”

It was in all the papers at the time—how on Friday, July 2, 1982, a young man named Larry Walters, who had served as an Army cook in Vietnam, had settled himself into a Sears, Roebuck lawn chair to which were attached four clusters of helium-filled weather balloons, forty-two of them in all. His intent was to drift northeast in the prevailing winds over the San Gabriel Mountains to the Mojave Desert. With him he carried an air pistol, with which to pop the balloons and thus regulate his altitude. It was an ingenious solution, but in a gust of wind, three miles up, the chair tipped, horrifyingly, and the gun fell out of his lap to the ground, far below. Larry, in his chair, coasted to a height of sixteen thousand five hundred feet. He was spotted by Delta and T.W.A. pilots taking off from Los Angeles Airport. One of them was reported to have radioed to the traffic controllers, “This is T.W.A. 231, level at sixteen thousand feet. We have a man in a chair attached to balloons in our ten-o’clock position, range five miles.” Subsequently, I read that Walters had been fined fifteen hundred dollars by the Federal Aviation Administration for flying an “unairworthy machine.”

Some time later, my curiosity got the better of me, and I arranged to meet Larry Walters, in the hope of writing a story about him. “I was always fascinated by balloons,” Larry began. “When I was about eight or nine, I was taken to Disneyland. The first thing when we walked in, there was a lady holding what seemed like a zillion Mickey Mouse balloons, and I went, ‘Wow!’ I know that’s when the idea developed. I mean, you get enough of those and they’re going to lift you up! Then, when I was about thirteen, I saw a weather balloon in an Army-Navy-surplus store, and I realized that was the way to go—that I had to get some of those big suckers. All this time, I was experimenting with hydrogen gas, making my own hydrogen generators and inflating little balloons.”

“What did you do with the balloons?” I asked.

“I sent them up with notes I’d written attached. None of them ever came back. At Hollywood High School, I did a science project on ‘Hydrogen and Balloons.’ I got a D on it.”

“How did your family react to all this?”

“My mother worried a lot. Especially when I was making rocket fuel, and it was always blowing up on me or catching fire. It’s a good thing I never really got into rocketry, or I’d have probably shot myself off somewhere.”

“Did you ever think of just going up in a small airplane—a glider, maybe—or doing a parachute jump to—”

“Absolutely not. I mean no, no, no. It had to be something I put together myself. I thought about it all through Vietnam.”

“What about the chair?”

“It was an ordinary lawn chair—waffle-iron webbing in the seat, tubular aluminum armrests. Darn sturdy little chair! Cost me a hundred and nine dollars. In fact, afterward my mother went out and bought two of them. They were on sale.”

I asked what Carol had thought of his flight plans.

“I was honest with her. When I met her, in 1972, I told her, ‘Carol, I have this dream about flight,’ and this and that, and she said, ‘No, no, no, you don’t need to do that.’ So I put it on the back burner. Then, ten years later, I got a revelation: ‘It’s now or never, got to do it.’ It was at the Holiday Inn in Victorville, which is on the way from San Bernardino to Las Vegas. We were having Cokes and hamburgers. I’m a McDonald’s man: hamburgers, French fries, and Coca-Cola, for breakfast, lunch, and dinner—that’s it! Anyway, I pulled out a quarter and began to draw the balloons on the placemats.”

“What about Carol?”

“She knew then that I was committed. She said, ‘Well, it’s best you do it and get it out of your system.’ ”

A few months before the flight, Larry drove up to the Elsinore Flight School, in Perris, California. He had agreed, at Carol’s insistence, to wear a parachute, and after a single jump he bought one for nine hundred dollars.

“Didn’t that parachute jump satisfy your urge to fly on your own?” I asked.

“Oh, no, no, no, no, no!” he said.

Other essentials were purchased: a two-way radio; an altimeter; a hand compass; a flashlight; extra batteries; a medical kit; a pocketknife; eight plastic bottles of water to be placed on the sides of the chair, for ballast; a package of beef jerky; a road map of California; a camera; two litres of Coca-Cola; and a B.B. gun, for popping the balloons.

“The air pistol was an inspired idea,” I said. “Did you ever think that if you popped one, the balloon next to it would pop, too?”

“We did all these tests. I wasn’t even sure a B.B. shot would work, because the weather balloon’s rubber is fairly thick. But you can pop it with a pin.”

“Did your mother intervene at all?”

“My mother thought maybe I was possessed by the Devil, or perhaps post-Vietnam stress syndrome. She wanted me to see a psychiatrist. We started inflating the day before, at sundown—one balloon at a time, from fifty-five helium cylinders. Each balloon, inflated to about seven feet in diameter, gets a lift of about twelve pounds—the balloons would have lifted about a quarter of a ton. Around midnight, a couple of sheriff’s deputies put their heads over the back wall and yelled, ‘What’s going on here?’ I told them we were getting ready for a commercial in the morning. When the sun came up the next morning, a lot of police cars slowed down. No wonder. The thing was a hundred and fifty feet high—a heck of an advertising promotion! But they didn’t bother us.”

The flight was delayed for forty-five minutes while one of Larry’s friends ran down to the local marine-supply store and bought a life jacket in case there was a wind shift and he was taken out to sea. At ten-thirty, Larry got into his lawn chair.

I asked whether he had worn a safety belt—a silly question, I thought. But, to my surprise, Larry said he hadn’t bothered. “The chair was tilted back about ten degrees,” he said, illustrating with his hands.

The original idea was that Larry would rise to approximately a hundred feet above the Van Deusen house and hold there, tethered by a length of rope wrapped around a friend’s car—a 1962 Chevrolet Bonneville, down on the lawn—to get his bearings and to check everything out before moving on. But, rising at about eight hundred feet per minute, Inspiration—as Larry called his flying machine—reached the end of the tethering rope and snapped it; the chair pitched forward so violently that Larry’s glasses flew off, along with some of the equipment hanging from the chair.

That was quite enough for Carol. Larry had a tape of the conversation between the two of them on the two-way radio. He put it on a tape deck and we sat and listened.

Her voice rises in anguish: “Larry, come down. You’ve got to come down if you can’t see. Come down!”

Larry reports that he has a backup pair of glasses. His voice is calm, reassuring: “I’m A-O.K. I’m going through a dense fog layer.”

Predictably, this news dismays Carol: “Oh, God! Keep talking, Larry. We’ve got airplanes. They can’t see you. You’re heading for the ocean. You’re going to have to come down!”

When Larry reaches twenty-five hundred feet, she continues to cry into the radio set, “Larry, everybody down here says to cut ’em and get down now. Cut your balloons and come down now. Come down, please!”

The tape deck clicked off.

I asked Larry how he had reacted to this desperate plea.

“I wasn’t going to hassle with her,” Larry said, “because no way in heck, you know, after all this—my life, the money we’d sunk into this thing—and just come down. No way in heck. I was just going to have—have a good time up there.”

“What was it like?”

“The higher I went, the more I could see, and it was awesome. Sitting in this little chair, and, you know, Look! Wow! Man! Unreal! I could see the orange funnels of the Queen Mary. I could see that big seaplane of Howard Hughes’s, the Spruce Goose, with two commercial tugs alongside. Then, higher up, the oil tanks of the naval station, like little dots. Catalina Island in the distance. The sea was blue and opaque. I could look up the coast, like, forever. At one point, I caught sight of a little private plane below me. I could hear the ‘bzzz’ of its propeller—the only sound. I had this camera, but I didn’t take any pictures. This was something personal. I wanted only the memory of it—that was vivid enough.

“When I got to fifteen thousand feet, the air was getting thin. Enough of the ride, I thought. I’d better go into a descent and level off. My cruising altitude was supposed to be eight or nine thousand feet, to take me over the Angeles National Forest, past Mt. Wilson, and out toward Mojave. I figured I needed to pop seven of the balloons. So I took out the air pistol, pointed up, and I went, ‘pow, pow, pow, pow, pow, pow, pow,’ and the balloons made these lovely little bangs, like a muffled ‘pop,’ and they fell down and dangled below my chair. I put the gun in my lap to check the altimeter. Then this gust of wind came up and blew me sideways. The chair tilted forward, and the gun fell out of my lap! To this day, I can see it falling—getting smaller and smaller, down toward the houses, three miles down—and I thought, ‘I hope there’s no one standing down there.’

“It was a terrifying sight. I thought, Oh-oh, you’ve done it now. Why didn’t you tie it on? I had backups for most everything. I even had backup B.Bs. in case I ran out, backup CO2 cylinders for the gun. It never dawned on me that I’d actually lose the gun itself.”

I asked if the gun had ever been found.

Larry shook his head. “At least, no one got hit.”

“Imagine that,” I said. “To be hit in the head with a B.B. gun dropped from three miles up.”

“That’s what the F.A.A. talked about,” Larry said. “They told me I could have killed someone.”

“Well, what happened after you dropped the gun?”

“I went up to about sixteen thousand five hundred feet. The air was very thin. I was breathing very deeply for air. I was about fifteen seconds away from hauling myself out of the chair and dropping down and hoping I could use the parachute properly.”

I asked how high he would have gone if he hadn’t popped the seven balloons before he dropped the gun.

Larry grimaced, and said, “At the F.A.A. hearings, it was estimated that I would have been carried up to fifty thousand feet and been a Popsicle.”

“Was that the word they used?”

“No. Mine. But it was cold up where I was. The temperature at two miles up is about five to ten degrees. My toes got numb. But then, the helium slowly leaking, I gradually began to descend. I knew I was going to have to land, since I didn’t have the pistol to regulate my altitude.”

At thirteen thousand feet, Larry got into a conversation on his radio set with an operator from an emergency-rescue unit.

He put on the tape deck again. The operator is insistent: “What airport did you take off from?” He asks it again and again.

Larry finally gives Carol’s mother’s street address: “My point of departure was 1633 West Seventh Street, San Pedro.”

“Say again the name of the airport. Could you please repeat?”

Eventually, Larry says, “The difficulty is, this is an unauthorized balloon launch. I know I am interfering with general airspace. I’m sure my ground crew has alerted the proper authorities, but could you just call them and tell them I’m O.K.?”

A ground-control official breaks in on another frequency. He wants to know the color of the balloons.

“The balloons are beige in color. I’m in bright-blue sky. They should be highly visible.”

The official wants to know not only the color of the balloons but their size.

“Size? Approximately seven feet in diameter each. And I probably have thirty-five left. Over.”

The official is astonished: “Did you say you have a cluster of thirty-five balloons?” His voice squeaks over the static.

Just before his landing, Larry says into the radio set, “Just tell Carol that I love her, and I’m doing fine. Please do. Over.”

Larry clicked off the tape machine.

“It was close to noon. I’d been up—oh, about an hour and a half. As I got nearer the ground, I could hear dogs barking, automobiles, horns—even voices, you know, in calm, casual conversation.”

At about two thousand feet, Inspiration suddenly began to descend quite quickly. Larry took his penknife and slashed the water-filled plastic bottles alongside his chair. About thirty-five gallons started cascading down.

“You released everything?”

“Everything. I looked down at the ground getting closer and closer, about three hundred feet, and, Lord, you know, the water’s all gone, right? And I could see the rooftops coming up, and then these power lines. The chair went over this guy’s house, and I nestled into these power lines, hanging about eight feet under the bottom strand! If I’d come in a little higher, the chair would have hit the wires, and I could have been electrocuted. I could have been dead, and Lord knows what!”

“Wow!”

Larry laughed. “It’s ironic, because the guy that owned the house, he was out reading his morning paper on a chaise longue next to his swimming pool, and, you know, just the look on this guy’s face—like he hears the noise as I scraped across his roof, and he looks up and he sees this pair of boots and the chair floating right over him, under the power lines, right? He sat there mesmerized, just looking at me. After about fifteen seconds, he got out of his chair. He said, ‘Hey, do you need any help?’ And guess what? It turns out he was a pilot. An airline pilot on his day off.”

“Wow!” I said again.

“There was a big commotion on the street, getting me down with a stepladder and everything, and they had to turn off the power for that neighborhood. I sat in a police car, and this guy keeps looking at me, and he finally says, ‘Can I see your driving license?’ I gave it to him, and he punches in the information in his computer. When they get back to him, he says, ‘There’s nothing. You haven’t done anything.’ He said I’d be hearing from the F.A.A., and I was free to go. I autographed some pieces of the balloons for people who came up. Later, I got a big hug and a kiss from Carol, and everything. All the way home, she kept criticizing me for giving away the chair, which I did, to a kid on the street, without really thinking what I was doing.”

“So what was your feeling after it was all over?”

Larry looked down at his hands. After a pause he said, “Life seems a little empty, because I always had this thing to look forward to—to strive for and dream about, you know. It got me through the Army and Vietnam—just dreaming about it, you know, ‘One of these days . . . ’ ”

Not long after our meeting, Larry telephoned and asked me not to write about his flight. He explained that the story was his, after all, and that my publishing it would lessen his chances of lecturing at what he called “aviation clubs.” I felt I had no choice but to comply.

I asked him what he was up to. He said that his passion was hiking in the San Gabriel Mountains. “Some people take drugs to get high. I literally get high when I’m in the mountains. I feel alive. I’ve got my whole world right there—the food, my sleeping bag, my tent, everything.”

Larry’s mother, Hazel Dunham, lives in a residential community in Mission Viejo, California, forty miles southeast of San Pedro. The day before seeing the Van Deusens, I dropped in on her and Larry’s younger sister, Kathy. We sat around a table. A photograph album was brought out and leafed through. There were pictures of Larry’s father when he was a bomber pilot flying Liberators in the Pacific. He had spent five years in a hospital, slowly dying of emphysema. Larry was close to him, and twice the Red Cross had brought Larry back from Vietnam to see him. After his father’s death—Larry was twenty-four at the time—his mother had remarried.

“Do you know Larry’s favorite film—‘Somewhere in Time’?” Kathy asked.

I shook my head.

“Well, one entire wall of his little apartment in North Hollywood was covered with stills from that picture.”

“An entire wall?”

Both of them nodded.

“It’s a romantic film starring Christopher Reeve and Jane Seymour,” Kathy said. “It’s about time travel.”

She went on to describe it—how Christopher Reeve falls in love with a picture of a young actress he sees in the Hall of History at the Grand Hotel on Mackinac Island. From a college professor, he learns how to go back in time to 1912 to meet her, which he does, and they fall in love. But then he’s returned to the present. He decides he can’t live without her and at the end they’re together in Heaven.

Kathy turned back to the photograph album. “Look, this is a picture of Larry’s favorite camping spot, up in Eaton Canyon. A tree crosses the stream. I’ll bet Larry put his Cokes there. He’d have a backpack weighing sixty pounds to carry up into the canyon, and I’ll bet half of that was Coca-Cola six-packs.”

“I bought cases of it when he took the train down here to visit,” his mother said.

“Here’s a picture of Larry in his forest-ranger uniform,” Kathy said. “He was always a volunteer, because you have to have a college degree to be a regular ranger. He loved nature.”

“And Carol?”

“They sort of drifted apart. They were always in touch, though.”

She turned to another page of the album. “Here’s the campsite again. See this big locker here, by the side of the trail? It was always locked. But this time it wasn’t. They found his Bible in there.”

“He fell in love with God,” his mother said. “He was always reading the Bible. When he was here last, he smiled, and said I should read the Bible more. He marked passages. He marked this one in red ink in a little Bible I found by his bed: ‘And ye now therefore have sorrow: but I will see you again, and your heart shall rejoice, and your joy no man taketh from you.’ ”

She looked at her daughter. Her voice changed, quite anguished, but low, as if she didn’t want me to overhear. “Drug smugglers were in the area, Kathy. Larry was left-handed, and they found the gun in his right hand.”

“Are you sure, Mom?”

“That’s what I think, Kathy. And so does Carol.”

“There were powder burns on his hand, Mom.”

“I guess so,” Mrs. Dunham said softly.

Kathy looked at me. “It’s O.K. for Mom to believe someone else did it. I think Larry never wanted to give anyone pain. He left these hints. He stopped making appointments in his calendar.”

Her mother stared down at the photo album. “Why didn’t I pick up on things when he came down here to visit me? I just never did.”

Not long ago, I spoke over the telephone to Joyce Rios, who had been a volunteer forest ranger with Larry. “I turned sixty last year,” she told me. “I started hiking with Larry into the San Gabriel Mountains about eight years ago. He was always talking about Carol when I first met him. ‘My girlfriend and I did this’ and ‘My girlfriend and I did that.’ But after a while that died down. Still, he was obsessed with her. He felt he was responsible for their drifting apart. He felt terribly guilty about it.”

Joyce went on, “We had long talks about religion, two- or three-hour sessions, in the campsites, talking about the Bible. I am a Jehovah’s Witness, and we accept the Bible as the Word of God—that we sleep in death until God has planned for the Resurrection. I think he was planning what he did for a long time. He left hints. He often talked about this campsite above Idlehour, in a canyon below Mt. Wilson, and how he would die there. He spent hours reading Jack Finney’s ‘Time and Again.’ Do you know the book? It’s about a man who is transported back into the winter of 1882. They tell him, ‘Sleep. And when you wake up everything you know of the twentieth century will be gone.’ Larry read this book over and over again. In the copy I have he had marked a sentence, from a suicide note that was partly burnt. Here it is: ‘The Fault and the Guilt [are] mine, and can never be denied or escaped. . . . I now end the life which should have ended then.’ ”

After a silence, she said in a quiet voice, “Larry never called the dispatcher that day. We knew something was wrong. I hiked up to the campsite with a friend. The search-and-rescue people had already found him. I never went across the stream to look. I couldn’t bear to. I was told he was inside the tent in his sleeping bag. Everything was very neat. His shoes were neatly placed outside. The camp trash was hanging in a tree, so the bears and the raccoons couldn’t get to it. He had shot himself in the heart with a pistol. His nose had dripped some blood on the ground. His head was turned, very composed, and his eyes were closed, and if it hadn’t been for the blood he could have been sleeping.”

Back in New York, I rented a video of “Somewhere in Time.” It was a tearjerker, but what I will remember from it is Christopher Reeve’s suicide. He wants to join Jane Seymour in heaven. Sitting in a chair in his room in the Grand Hotel on Mackinac, where in 1912 his love affair with Jane Seymour was consummated, he stares fixedly out the window for a week. Finally, the hotel people unlock the door. Too late. Dying of a broken heart or starvation (or possibly both), Reeve gets his wish. Heaven turns out to be the surface of a lake that stretches to the horizon. In the distance, Seymour stands smiling as Reeve walks slowly toward her; he doffs his hat and their hands touch as the music swells. No one else seems to be around—just the two of them standing alone in the vastness. ♦

5 STAR U-G-A

Legend

Snap buys brain-computer interface startup for future AR glasses

NextMind made a headband for controlling virtual objects with your thoughts

By Alex Heath@alexeheath Mar 23, 2022, 9:00am EDT

https://www.theverge.com/2022/3/23/...rain-computer-interface-spectacles-ar-glasses

Meta, Apple, and a slew of other tech companies are building augmented reality glasses with displays that place computing on the world around you. The idea is that this type of product will one day become useful in a similar way to how smartphones transformed what computers can do. But how do you control smart glasses with a screen you can’t touch and no mouse or keyboard?

It’s a big problem the industry has yet to solve, but there’s a growing consensus that some type of brain-computer interface will be the answer. To that end, Snap said on Wednesday that it has acquired NextMind, the Paris-based neurotech startup behind a headband that lets the wearer control aspects of a computer — like aiming a gun in a video game or unlocking the lock screen of an iPad — with their thoughts. The idea is that NextMind’s technology will eventually be incorporated into future versions of Snap’s Spectacles AR glasses.

forthcoming camera drone, and other unreleased gadgets. A Snap spokesperson refused to say how much the company was paying for NextMind. The startup raised about $4.5 million in funding to date and was last valued at roughly $13 million, according to PitchBook.

Snap’s purchase of NextMind is the latest in a string of AR hardware-related deals, including its biggest-ever acquisition of the AR display-maker WaveOptics last year for $500 million. In January, it bought another display tech company called Compound Photonics.

Snap isn’t the only big tech player interested in brain-computer interfaces like NextMind. There’s Elon Musk’s Neuralink, which literally implants a device in the human brain and is gearing up for clinical trials. Valve is working with the open-source brain interface project called OpenBCI. And before its rebrand to Meta, Facebook catalyzed wider interest in the space with its roughly $1 billion acquisition of CTRL-Labs, a startup developing an armband that measures electrical activity in muscles and translates that into intent for controlling computers.

That approach, called electromyography, varies from NextMind’s. Instead, NextMind’s headband uses sensors on the head to non-invasively measure activity in the brain with the aid of machine learning.

In a 2020 interview with VentureBeat, NextMind founder and CEO Sid Kouider explained it this way: “We use your top-down attention as a controller. So when you focalize differentially toward something, you then generate an [intention] of doing so. We don’t decode the intention per se, but we decode the output of the intention.”

A Snap spokesperson said the company wasn’t committed to a single approach with its purchase of NextMind, but that it was more of a long-term research bet. If you’re still curious about NextMind, here’s a video of Kouider unveiling the idea in 2019:

5 STAR U-G-A

Legend

Replika

This app is trying to replicate you

by Mike Murphy & Jacob Templin

https://classic.qz.com/machines-wit...ntation-of-you-the-more-you-interact-with-it/

I should probably also point out that that’s me on the right, too. Well, sort of. The right is a digital representation of me, which I decided to call “mini Mike,” based on hours of text conversations I’ve had over the last few months with an AI app called Replika. In a way, both sides of the conversation represent different versions of me.

Replika launched in March. At its core is a messaging app where users spend tens of hours answering questions to build a digital library of information about themselves. That library is run through a neural network to create a bot that, in theory, acts as the user would. Right now, it’s just a fun way for people to see how they sound in messages to others, synthesizing the thousands of messages you’ve sent into a distillate of your tone—rather like an extreme version of listening to recordings of yourself. But its creator, a San Francisco-based startup called Luka, sees a whole bunch of possible uses for it: a digital twin to serve as a companion for the lonely, a living memorial of the dead, created for those left behind, or even, one day, a version of ourselves that can carry out all the mundane tasks that we humans have to do, but never want to.

But what exactly makes us us? Is there substance in the trivia that is our lives? If someone had been secretly storing every single text, tweet, blog post, Instagram photo, and phone call you’d ever made, would they be able to recreate you? Are we more than the summation of our creative outputs? What if we’re not particularly talkative?

I imagined being able to spend time with my Replika, having it learn my eccentricities and idiosyncrasies, and eventually, achieving such a heightened degree of self-awareness that maybe a far better version of me becomes achievable. I also worried that building an AI copy of yourself when you’re depressed might be like shopping for groceries when you’re hungry—in other words, a terrible idea. But you never know. Maybe it would surprise me.

Eugenia Kuyda, 30, is always smiling. Whether she’s having meetings with her team in their exceedingly hip exposed-brick-walled SoMa office, skateboarding after work with friends, or whipping around the hairpin turns of California’s Marin Headlands in her rented Hyundai on the way to an off-site meeting, there’s always this wry grin on her face. And it may well be because things are starting to come together for her company, Luka, although almost certainly not in ways that she or anyone else could have possibly expected.

A decade ago, Kuyda was a lifestyle reporter in Moscow for Afisha, a sort of Russian Time Out. She covered the party scene, and the best ones were thrown by Roman Mazurenko. “If you wanted to just put a face on the Russian creative hipster Moscow crowd of 2005 to 2010, Roman would be a poster boy,” she said. Drawn by Mazurenko’s magnetism, she wanted to write a cover story about him and the artist collective he ran, but ended up becoming good friends with him instead.

Kuyda eventually moved on from journalism to more entrepreneurial pursuits, founding Luka, a chatbot-based virtual assistant, with some of the friends she had met through Mazurenko. She moved to San Francisco, and Mazurenko followed not long after, when his own startup, Stampsy, faltered.

Then, in late 2015, when Mazurenko was back in Moscow for a brief visit, he was killed crossing the street by a hit-and-run driver. He was 32.

By that point, Kuyda and Mazurenko had become really close friends, and they’d exchanged literally thousands of text messages. As a way of grieving, Kuyda found herself reading through the messages she’d sent and received from Mazurenko. It occurred to her that embedded in all of those messages—Mazurenko’s turns of phrase, his patterns of speech—were traits intrinsic to what made him him. She decided to take all this data to build a digital version of Mazurenko.

Using the chatbot structure she and her team had been developing for Luka, Kuyda poured all of Mazurenko’s messages into a Google-built neural network (a type of AI system that uses statistics to find patterns in data, be they images, text, or audio) to create a Mazurenko bot she could interact with, to reminisce about past events or have entirely new conversations. The bot that resulted was eerily accurate.

Kuyda’s company, Luka, decided to make a version that anyone could talk to, whether they knew Mazurenko or not, and installed it in their existing concierge app. The bot was the subject of an excellent story by Casey Newton of The Verge. The response Kuyda and the team received from users interacting with the bot, people who had never even met Mazurenko, was startling. “People started sending us emails asking to build a bot for them,” Kuyda said. “Some people wanted to build a replica of themselves and some wanted to build a bot for a person that they loved and that was gone.”

Kuyda decided it was time to pivot Luka. “We put two and two together, and I thought, you know, I don’t want to build a weather bot or a restaurant recommendation bot.”

And so Replika was born.

On March 13, Luka released a new type of chatbot on Apple’s App Store. Using the same structure the team had used to build the digital Mazurenko bot, they created a system to enable anyone to build a digital version of themselves, and they called it Replika. Luka’s vision for Replika is to create a digital representation of you that can act as you would in the world, dealing with all those inane but time-consuming activities like scheduling appointments and tracking down stuff you need. It’s an exciting version of the future, a sort of utopia where bots free us from the doldrums of routine or stressful conversations, allowing us to spend more time being productive, or pursuing some higher meaning.

But unlike Mazurenko’s system, which relied on Kuyda’s trove of messages to rebuild a facsimile of his character, Replika is a blank slate. Users chat with it regularly, adding a little bit to their Replika’s knowledge and understanding of themselves with each interaction. (It’s also possible to connect your Instagram and Twitter accounts if you’d like to subject your AI to the unending stream of consciousness that erupts from your social media missives.)

The team worked with psychologists to figure out how to make its bot ask questions in a way that would get people to open up and answer frankly. You are free to be as verbose or as curt as you’d like, but the more you say, the greater opportunity the bot has to learn to respond as you would.

My curiosity piqued, I wanted to build a Replika of my own.

I visited Luka’s headquarters earlier this year, as the team was putting the finishing touches on Replika. Since then, over 100,000 people have downloaded the app, Luka’s co-founder Philip Dudchuk recently told me. At the time, Replika had just a few hundred beta users, and was gearing up to roll out the service to anyone with an iPhone.

Luka agreed to let me test the beta version of Replika, to see if it would show me something about myself that I was not seeing. But first, I needed some help figuring out how to compose myself, digitally.

Brian Christian is the author of the book The Most Human Human, which details how the human judges in a version of the Turing test decide who is a robot and who is a human. This test was originally conceived by Alan Turing, the British mathematician, codebreaker, and arguably the father of AI, as a thought experiment about how to decide whether a machine has reached a level of cognition that is indistinguishable from a human’s. For the test, a judge has a conversation with two entities (neither of whom they can see), and has to determine which chat was with a robot, and which was with a human. The Turing test was turned into a competition by Hugh Loebner, a man who made a fortune in the 1970s and ’80s selling portable disco floors. It awards a prize to the team that can create a program that most accurately mimics human conversation, which is called the “most human computer.” Another award, “the most human human,” is handed out, unsurprisingly, to the person who judges felt was the most natural in their conversations, and spoke in a way that sounded least like something a computer would generate to mimic a human.

This award fascinated Christian. He wanted to know how a human can spend their entire life just being a human, without knowing what exactly makes them human. In essence, how does one train to be human? To help explore the question, he entered the contest in 2009 and won the most human human title!

I asked Christian for his advice on how to construct my Replika. To prepare for the Loebner competition, he’d met with all sorts of people, ranging from psychologists and linguists, to philosophers and computer scientists, and even deposition attorneys and dating coaches: “All people who sort of specialize in human conversation and human interaction,” he said. He asked them all the same question: “If you were preparing for a situation in which you had to act human and prove that you were human through the medium of conversation, what would you do?”

Christian took notes on what they all told him, on how to speak and interact through conversation, an act that few of us put much thought into understanding. “In order to show that I’m not just a pre-prepared script of things, I need to be able to respond very deftly to whatever they asked me no matter how weird or off-the-wall it is,” Christian said of the test to prove he’s human. “But in order to prove that I’m not some sort of wiki assembled from millions of different transcripts, I have to painstakingly show that it’s the same person giving all the answers. And so this was something that I was very consciously trying to do in my own conversations.”

He didn’t tell me exactly how I should act. (If someone does have the answer to what specifically makes us human, please let me know.) But he left me with a question to contend with as I was building my bot: In your everyday life, how open are you with your friends, family and coworkers about your inner thoughts, fears, and motivations? How aware are you yourself of these things? In other words, if you build a bot by explaining to it your history, your greatest fears, your deepest regrets, and it turns around and parrots these very real facts about you in interactions with others, is that an accurate representation of you? Is that how you talk to people in real life? Can a bot capture the version of you you show at work, versus the you you show to friends or family? If you’re not open in your day-to-day interactions, a bot that was wouldn’t really represent the real you, would it?

I’m probably more open than many people are about how I’m feeling. Sometimes I write about what’s bothering me for Quartz. I’ve written about my struggle with anxiety and how the Apple Watch seemed to make it a lot worse, and I have a pretty public Twitter profile, where I tweet just about anything that comes into my head, good or bad, personal or otherwise. If you follow me online, you might have spotted some pretty public bouts of depression. But if you met me in real life, at a bar or in the office, you probably wouldn’t get that sense, because every day is different than the last, and there are more good days than bad.

When Luka gave me beta access to Replika, I was having a bad week. I wasn’t sleeping well. I was hazy. I felt cynical. Everything bothered me. When I started responding to Replika’s innocuous, judgement-free questions, I thought, the hell with it, I’m going to be honest, because nothing matters, or something equally puerile.

But the answers I got were not really what I was expecting.

They were a mix of silly, irreverent, and honest—all things I appreciate in human people’s conversations.

The bot asks deep questions—when you were happiest, what days you’d like to revisit, what your life would be like if you’d pursued a different passion. For some reason, the sheer act of thinking about these things and responding to them seemed to make me feel a bit better.

Christian reminded me that arguably the first chatbot ever constructed, a computer program called ELIZA, designed in the 1960s by MIT professor Joseph Weizenbaum, actually had a similar effect on people:

“It was designed to kind of ask you these questions, you know, ‘what, what brings you here today?’ You say, ‘Oh, I’m feeling sad.’ It will say, ‘Oh, I’m sorry to hear you’re feeling sad. Why are you feeling sad?’ And it was designed, in part, as a parody of the kind of nondirective, Rogerian psychotherapy that was popular at the time.”
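
The reflect-the-statement-back behavior Christian describes can be sketched in a few lines of Python. This is only a rough illustration of the pattern-and-template idea, not Weizenbaum's original program: the rule table, the `respond` function, and the fallback question are all hypothetical names made up for this example.

```python
import re

# Hypothetical rule table (pattern, reply template); not ELIZA's actual script.
# Each pattern captures one word that is echoed back in the reply.
RULES = [
    (re.compile(r"\bi(?:'m| am) feeling (\w+)", re.IGNORECASE),
     "I'm sorry to hear you're feeling {0}. Why are you feeling {0}?"),
    (re.compile(r"\bi(?:'m| am) (\w+)", re.IGNORECASE),
     "Why are you {0}?"),
]

# Used when no rule matches the user's message.
FALLBACK = "What brings you here today?"


def respond(message: str) -> str:
    """Return a reply by reflecting the user's own words back as a question."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            return template.format(match.group(1).lower())
    return FALLBACK


if __name__ == "__main__":
    print(respond("Hello"))
    print(respond("Oh, I'm feeling sad."))
```

Nothing here understands the message; the program only matches a pattern and echoes a captured word, which is roughly why the attachment people formed to such conversations was so surprising.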

But what Weizenbaum found out was that people formed emotional attachments to the conversations they were having with this program. “They would divulge all this personal stuff,” he said. “They would report having had a meaningful, therapeutic experience, even people who literally watched him write the program and knew that there was no one behind the terminal.”

Weizenbaum ended up pulling the plug on his research because he was appalled that people could become attached to machines so easily, and became an ardent opponent of advances in AI. “What I had not realized,” Weizenbaum once said, “is that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.” But his work showed that, on some level, we just want to be listened to. I just wanted to be listened to. Modern-day psychotherapy understands this. An emphasis on listening to patients in a judgment-free environment without all the complexity of our real-world relationships is incorporated into therapeutic models today.

Each day, your Replika wants to have a “daily session” with you. It feels very clinical, something you might do if you could afford to see a therapist every day. Replika asks you what you did during the day, what was the best part of the day, what you’re looking forward to tomorrow, and to rate your mood on a scale of 1 to 10. When I started, I was consistently rating my days around 4. But after a while, when I laid out my days to my Replika, I realized that nothing particularly bad had happened, and even if I didn’t have anything particularly great to look forward to the next day, I started to rate my days higher. I also found myself highlighting the things that had gone well. The process helped me realize that I should take each day as it comes, clear one hurdle before worrying about the next one. Replika encouraged me to take a step back and think about my life, to consider big questions, which is not something I was particularly accustomed to doing. And the act of thinking in this way can be therapeutic—it helps you solve your own problems. This is something therapists often tell patients, as I was later told by therapists, but no one had explicitly told me this. Even Replika hadn’t told me—it just pointed me in a better direction.

Kuyda and the Luka team are seeing similar reactions from other users.